I have previously written two posts about the cost of using Amazon AWS, one way back in May 2010 and one in August 2015. In both cases, running a rack full of servers 24/7, 365 days a year, was much more expensive on AWS than hosting our own equipment in a data center.

A project I’m working on is growing, and we are considering a couple of options. Our volume increases a lot during USA working hours, specifically Monday – Friday, 8am – 1pm. The two options are:

1) Provision Amazon EC2 servers during peak times, so 5 hours a day and 5 days a week.

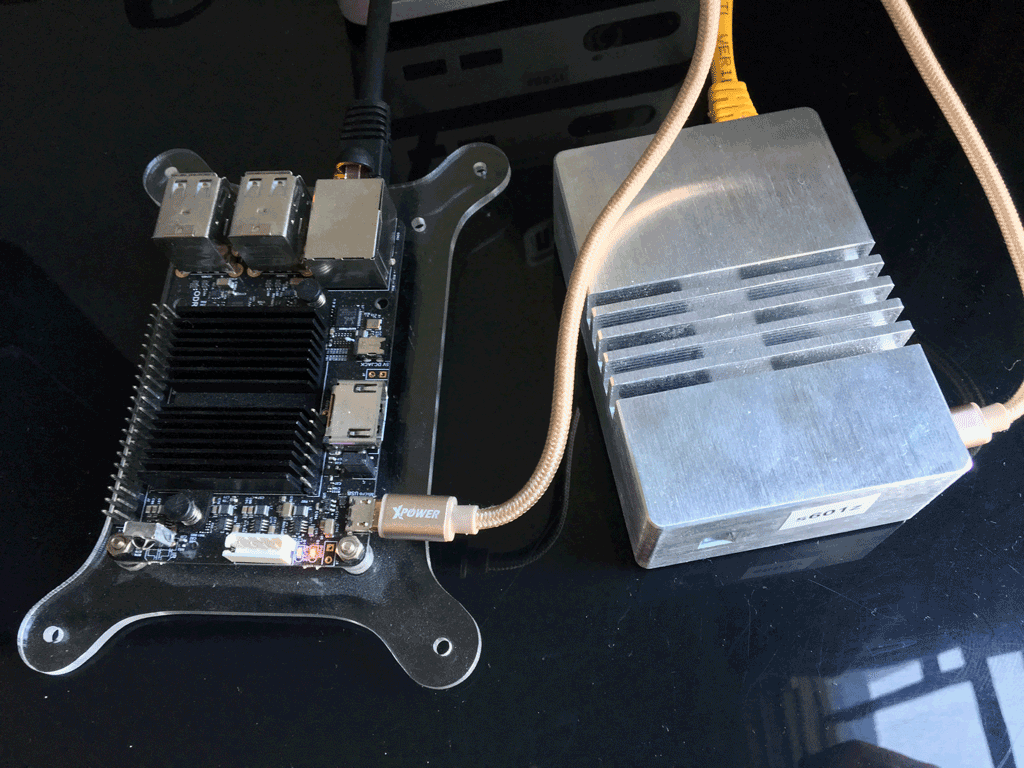

2) Purchase a bunch of Intel NUC DC53427HYE computers. These are not server grade hardware, but they do have vPro technology, which allows them to be remotely managed.

We have purchased one of these little Intel NUC machines for testing. It was new in the box for $180 plus shipping, so about $200 for the base unit. We need 4GB of RAM and 120GB of disk space.

Fully configured, the NUC costs about $290 ($200 NUC + $60 SSD + $30 RAM). For hosting, we would rent 10U at our data center with 20 amps of power and 30Mbps of bandwidth. We should easily be able to power 30 of these little NUC machines with 20 amps. The data center space will cost $275 per month for 24/7 operation, with the 30Mbps of bandwidth billed on 95th percentile usage.

Let's run some CPU benchmarks on these little guys and see how much Amazon cloud hosting would cost us for the same compute power running only 5×5 (5 hours a day, 5 days a week). It should be a lot cheaper to go the Amazon route… but let's find out.

The pricing and servers have not changed much since I ran the benchmarks last year. These benchmarks were run in Amazon US West (Oregon) using Performance Test 9.0, which is a different version than last year, so the scores are a bit different.

Instance Type:

c4.large – 8 ECU – 2 vCPU – 3.75gb RAM – $0.193 per Hour

Windows Server 2016

CPU Mark: 2,925

Disk Mark: 791 (EBS Storage)

Intel NUC DC53427HYE – Intel i5-3427U – 4gb RAM

Windows 10 Pro

CPU Mark: 3,888

Disk Mark: 3,913 (Samsung 840 EVO 120gb mSATA SSD)

Wow, the benchmark performance for the Amazon instance is quite a bit lower than the basic Intel NUC.

The total CPU score of 30 of these Intel NUC servers would be 116,640 (30 x 3,888). To get the same compute power out of c4.large instances at Amazon, we would need to boot up 40 instances (116,640 / 2,925 ≈ 39.9, rounded up).
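As a quick sanity check on that instance count, here is a minimal Python sketch. It assumes CPU Mark scores add up linearly across machines, which is a simplification, and the function name `instances_needed` is purely illustrative.

```python
import math


def instances_needed(target_score: float, per_instance_score: float) -> int:
    """Smallest whole number of instances whose combined score meets the target."""
    return math.ceil(target_score / per_instance_score)


# Scores taken from the benchmark results above.
nuc_cpu_mark = 3888
c4_large_cpu_mark = 2925
nuc_count = 30

# Assumption: aggregate compute is simply score x machine count.
total_nuc_score = nuc_count * nuc_cpu_mark
c4_count = instances_needed(total_nuc_score, c4_large_cpu_mark)

print(f"Total NUC score: {total_nuc_score:,}")
print(f"c4.large instances needed: {c4_count}")
```

Rounding up matters here because you cannot boot a fraction of an instance.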

Let's run the costs at Amazon. We would need 40 servers at $0.193 per hour. We need them for 5 hours a day, 20 days a month. So the math looks like this.

40 servers x 0.193 = $7.72 an hour

$7.72 x 5 hours = $38.60 per day

$38.60 x 20 days = $772 per month

In addition, we need to take into consideration the load balancer, bandwidth and storage. We are using an estimate of 3,300 GB per month inbound and outbound to get our estimated pricing. This is only a fraction of the total bandwidth we could theoretically move on our 30Mbps line from the data center.
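To put that fraction in perspective, here is a rough sketch of the theoretical ceiling on a 30Mbps line. It assumes a 30-day month, decimal gigabytes, one direction of traffic, and a line that is saturated the whole time, so treat the result as an upper bound, not something you would see in practice.

```python
def max_monthly_gb(mbps: float, days: int = 30) -> float:
    """Upper bound on one-direction transfer at a sustained line rate, in decimal GB."""
    seconds = days * 24 * 60 * 60
    bits = mbps * 1_000_000 * seconds
    return bits / 8 / 1_000_000_000


capacity_gb = max_monthly_gb(30)  # the 30Mbps data center line
estimate_gb = 3300                # the monthly transfer estimate used for pricing

print(f"Theoretical capacity: {capacity_gb:,.0f} GB per month")
print(f"Estimate as share of capacity: {estimate_gb / capacity_gb:.0%}")
```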

Load Balancer Pricing

$0.025 per hour x 100 hours (5 hours x 20 days) = $2.50 per month

$0.008 per GB x 3,300 GB = $26.40 per month

Bandwidth Pricing

3,300 gigs outbound bandwidth = $297 per month

Storage

Sorry Amazon, your pricing is so complex I can’t even figure out how much the disks are going to cost to provision 120gigs per machine for the 5 hours x 5 days operation…

So the rough cost, before storage, is going to be somewhere in the range of $1,100 per month ($772 + $2.50 + $26.40 + $297 = $1,097.90) for only 5 hours a day, 5 days a week.
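The monthly line items above can be added up in a few lines of Python. This is only a sketch using the rates quoted in this post; storage is left out because it was never priced, and `Decimal` is used so the cents do not drift.

```python
from decimal import Decimal

hours_per_month = 5 * 20  # 5 hours a day, 20 working days a month

# Rates and volumes as quoted in this post; storage is intentionally excluded.
line_items = {
    "EC2 compute (40 x c4.large)": 40 * Decimal("0.193") * hours_per_month,
    "Load balancer hours": Decimal("0.025") * hours_per_month,
    "Load balancer data (3,300 GB)": Decimal("0.008") * 3300,
    "Outbound bandwidth (3,300 GB)": Decimal("297"),
}

monthly_total = sum(line_items.values())

for name, cost in line_items.items():
    print(f"{name}: ${cost:.2f}")
print(f"Total before storage: ${monthly_total:.2f}")
```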

Let's look at a 1 year investment.

Amazon = $13,200 per year

Buying 30 Intel NUC servers and hosting them.

Intel NUC x 30 = $290 x 30 = $8,700

Hosting = $275 x 12 months = $3,300

Total to buy and host for the 1st year = $12,000

Even paying for the hardware up front and running the servers 24/7, owning still comes out cheaper than going to the cloud for only 5 hours a day, 5 days a week.

Let's lengthen the time to 2 years.

Amazon = $26,400

Own & Host = $15,300

And the 3 year view?

Amazon = $39,600

Own & Host = $18,600
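The multi-year comparison is easy to reproduce, and it also shows roughly when owning starts to win. The sketch below assumes the rounded $1,100 per month Amazon figure, $290 per NUC and $275 per month for hosting, and it ignores anything not priced in this post (Amazon storage, hardware failures, our own time). The function names are illustrative only.

```python
def aws_cost(months: int, monthly: int = 1100) -> int:
    """Cumulative Amazon cost at the rounded monthly estimate."""
    return monthly * months


def own_cost(months: int, units: int = 30, unit_price: int = 290,
             hosting: int = 275) -> int:
    """Up-front hardware purchase plus cumulative data center hosting."""
    return units * unit_price + hosting * months


for years in (1, 2, 3):
    months = years * 12
    print(f"{years} year(s): Amazon ${aws_cost(months):,} "
          f"vs Own & Host ${own_cost(months):,}")

# First month in which owning is no more expensive than Amazon.
break_even = next(m for m in range(1, 121) if own_cost(m) <= aws_cost(m))
print(f"Break-even month: {break_even}")
```

Under these assumptions the hardware pays for itself within the first year, which is why the gap keeps widening in years 2 and 3.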

Incredible. I thought going to the cloud would be more cost effective than buying and hosting our own equipment when we only need the extra capacity for a limited amount of time per day.
