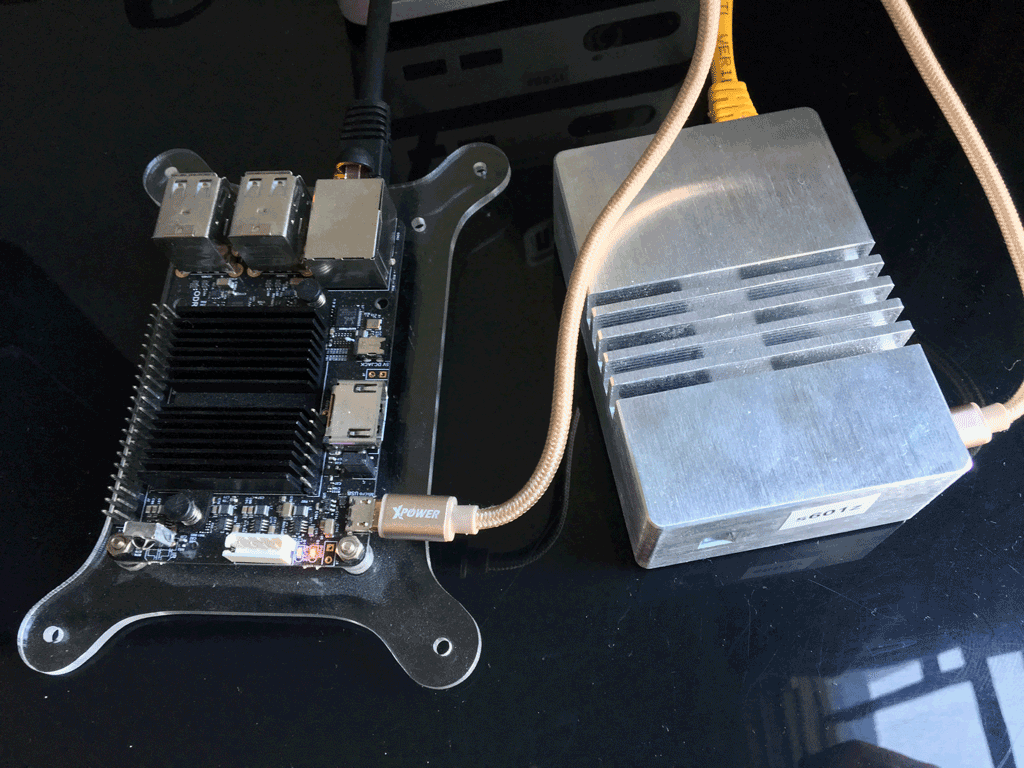

I really like the ODROID-C2 by HardKernel. The boards are very reliable, have a quality network card and lots of RAM, and perform incredibly well when used with eMMC flash storage.

I have three of them, and they have all performed so well that I am going to deploy them to data centers to act as DNS servers.

Need a case for that… hmmm… how about Cogent Design’s all-aluminum case? It keeps the little board very safe and cool by replacing the stock heat sink, so that the entire case acts as the cooling system.

I’m going to run a couple of ‘very scientific’ tests to measure the performance of the case vs. the stock cooler that comes on the board.

The boards were tested side by side at an ambient room temperature of 21.5°C (70.7°F), with no fan in the room. Both units are powered from the same USB power supply.

Both nodes run Armbian Debian (jessie) 3.14.79, with nothing active on the machines beyond the base OS.

After about 25 minutes at idle, the stock cooler came in at 40°C and the aluminum case at 36°C. Not a massive difference, but it is cooler. To the touch, both the cooler and the case are warm but not too hot.

Heat It Up

I used the Linux stress program to apply a bit of heat to the computers. The following command was run for about 1.5 hours to see how high the temperatures would climb.

stress --cpu 4

Amazingly, neither computer heated up very much. Both stayed much cooler than I expected, with the stock cooler climbing to 60°C and the aluminum case only reaching 55°C. Perhaps the stock cooler only reached 60°C because its board is not enclosed in a case, but I have no case to test it with.
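To collect readings like these over a long `stress` run, the temperature can be sampled periodically. The Python sketch below is one way to do it, not the method used for the numbers above. It assumes the SoC temperature is exposed in millidegrees Celsius at `/sys/class/thermal/thermal_zone0/temp`, the usual Linux sysfs location, which may differ on this kernel. The one-minute interval and 90 samples are arbitrary choices that roughly cover a 1.5-hour run.

```python
import time
from pathlib import Path
from typing import List

# Assumption: the usual Linux sysfs thermal zone, reporting millidegrees C.
# The zone number (or the whole path) may differ on your board/kernel.
THERMAL_PATH = Path("/sys/class/thermal/thermal_zone0/temp")


def read_celsius(path: Path = THERMAL_PATH) -> float:
    """Read a sysfs thermal zone and convert millidegrees to degrees C."""
    raw = path.read_text().strip()
    return int(raw) / 1000.0


def log_temperatures(path: Path = THERMAL_PATH, interval_s: float = 60.0,
                     samples: int = 90) -> List[float]:
    """Sample the temperature `samples` times, `interval_s` seconds apart."""
    readings = []
    for i in range(samples):
        temp = read_celsius(path)
        readings.append(temp)
        print(f"{time.strftime('%H:%M:%S')} sample {i + 1}: {temp:.1f} C")
        # Skip the sleep after the final sample.
        if i < samples - 1:
            time.sleep(interval_s)
    return readings
```

Start `stress --cpu 4` in one terminal, then call `log_temperatures()` from another on each board so both logs cover the same period.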

Testing Against Each Other

Since I have three of these devices, I purchased three cases. While the temperatures observed above are low, does the case really keep the machine cool enough?

Maybe I used too much thermal paste, or maybe not enough?

I performed a few tests with all the cases, using different amounts of thermal compound. They all performed the same.

Suggestions For Possible Improvement

One difference I see between the aluminum case and the stock CPU cooler is surface area. I’m no mathematician, but I suspect the stock cooler may have a similar amount of surface area because of all its fins, despite measuring only 43mm x 33mm.
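To put rough numbers on that intuition, here is a small Python sketch that compares the area of a flat top with the same top carrying fins. The 43 x 33 footprint is read in millimetres. The fin count (9) and fin height (10mm) are hypothetical values, not measurements of the stock cooler, and the model counts only the two long faces of each fin.

```python
def flat_top_area(length_mm: float, width_mm: float) -> float:
    """Area of a plain flat top surface in mm^2."""
    return length_mm * width_mm


def finned_area(length_mm: float, width_mm: float, fin_count: int,
                fin_height_mm: float) -> float:
    """Flat footprint plus two faces per fin running the full length.

    Simplification: fin ends are ignored, and the base area under each
    fin is assumed to be replaced by that fin's tip.
    """
    fin_faces = 2 * fin_count * fin_height_mm * length_mm
    return flat_top_area(length_mm, width_mm) + fin_faces


# Footprint from the article; fin count and height are hypothetical.
flat = flat_top_area(43, 33)
finned = finned_area(43, 33, fin_count=9, fin_height_mm=10)
print(f"flat: {flat} mm^2, finned: {finned} mm^2, ratio: {finned / flat:.1f}x")
```

Even with these made-up fin dimensions, the fins multiply the exposed area several times over, which is why a small finned cooler can rival a much larger flat surface.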

From looking at the case, I would suggest that Cogent Design consider keeping more material in the lid for a v3 of this case. It seems to me that more material is being removed from the inside of the lid than needs to be.

Then increase the surface area by producing ridges or fins on the outside of the case where possible. Even a ridge only 1mm deep adds a lot of surface area for cooling, just like on traditional CPU coolers. No one produces a CPU cooler that is a solid block of aluminum; maximizing the surface area maximizes the cooling performance.
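As a rough check on how much 1mm-deep ridges could add, the Python sketch below models straight rectangular ridges running across a lid. The lid size (90mm x 60mm) and ridge pitch (3mm) are hypothetical values chosen for illustration; only the 1mm depth comes from the suggestion above.

```python
def ridged_lid_area(length_mm: float, width_mm: float,
                    ridge_depth_mm: float, ridge_pitch_mm: float) -> float:
    """Exposed top area (mm^2) of a lid with straight ridges across its width.

    Each ridge adds two vertical side walls of ridge_depth_mm x width_mm.
    Ridge tops and groove floors together still cover the flat footprint.
    """
    ridge_count = int(length_mm // ridge_pitch_mm)
    side_walls = 2 * ridge_count * ridge_depth_mm * width_mm
    return length_mm * width_mm + side_walls


# Hypothetical lid: 90mm x 60mm, 1mm-deep ridges every 3mm.
flat_lid = 90 * 60
ridged_lid = ridged_lid_area(90, 60, ridge_depth_mm=1, ridge_pitch_mm=3)
gain_pct = (ridged_lid - flat_lid) / flat_lid * 100
print(f"flat: {flat_lid} mm^2, ridged: {ridged_lid} mm^2, gain: {gain_pct:.0f}%")
```

Under these assumptions, shallow ridges add about two thirds more area to the lid without making it any taller than 1mm.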

Because the case is essentially a solid block of aluminum, the CPU heats up the block, but without more surface area its ability to dissipate that heat is limited.

I really noticed the difference between the stock cooler and the aluminum case when the stress test ended. The stock cooler was able to lower its temperature much more quickly, which I attribute to the surface area of the stock cooler.
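One simple way to reason about the cool-down is Newton's law of cooling in its lumped-capacitance form: the temperature decays exponentially toward ambient with time constant τ = C/(hA), where C is the heat capacity, h the convection coefficient and A the surface area. The Python sketch below assumes that model. The heat capacity, convection coefficient and both areas are hypothetical values; only the 55°C peak and 21.5°C ambient come from the tests above.

```python
import math

AMBIENT_C = 21.5  # room temperature during the tests
PEAK_C = 55.0     # case temperature at the end of the stress run


def time_constant_s(heat_capacity_j_per_k: float, h_w_per_m2k: float,
                    area_m2: float) -> float:
    """tau = C / (h * A): more surface area gives a shorter time constant."""
    return heat_capacity_j_per_k / (h_w_per_m2k * area_m2)


def temperature_after(t_s: float, tau_s: float, start_c: float = PEAK_C,
                      ambient_c: float = AMBIENT_C) -> float:
    """Newton's law of cooling: exponential decay toward ambient."""
    return ambient_c + (start_c - ambient_c) * math.exp(-t_s / tau_s)


def seconds_to_reach(target_c: float, tau_s: float, start_c: float = PEAK_C,
                     ambient_c: float = AMBIENT_C) -> float:
    """Invert the decay curve to find when target_c is reached."""
    return tau_s * math.log((start_c - ambient_c) / (target_c - ambient_c))


# Hypothetical: same heat capacity (J/K) and convection coefficient (W/m^2K),
# one body with twice the surface area (m^2) of the other.
tau_small = time_constant_s(100.0, 10.0, 0.005)
tau_large = time_constant_s(100.0, 10.0, 0.010)
for label, tau in (("smaller area", tau_small), ("larger area", tau_large)):
    print(f"{label}: tau = {tau:.0f} s, reaches 40 C after "
          f"{seconds_to_reach(40.0, tau):.0f} s")
```

In this model, with everything else held equal, doubling A halves τ and therefore halves the time needed to drop to any given temperature, which is consistent with the finned stock cooler recovering faster.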

Would I Purchase Again?

Would I purchase this case again if I were looking for another case? Yes, I would. Hopefully version 3 will be available should I need a few more in the future.

I have previously written two posts about the cost of using Amazon AWS, one way back in

I have previously written two posts about the cost of using Amazon AWS, one way back in  We have purchased 1 of these little Intel NUC machines for testing, purchased new in the box for $180 – plus shipping so about $200 for the base unit. We need 4gb of RAM and 120gb of disk space.

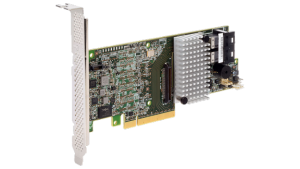

We have purchased 1 of these little Intel NUC machines for testing, purchased new in the box for $180 – plus shipping so about $200 for the base unit. We need 4gb of RAM and 120gb of disk space. It is February 2016. I am trying to find Windows drivers for an ‘antique’ Intel RIAD controller, the ESB2 SATA RAID card.

It is February 2016. I am trying to find Windows drivers for an ‘antique’ Intel RIAD controller, the ESB2 SATA RAID card. I am attempting to boost the performance of some spinning disks by using

I am attempting to boost the performance of some spinning disks by using